Artificial intelligence (AI) research company OpenAI has announced two more models — DALL·E and CLIP — that use a combination of language and images in a way that will make AI better at understanding both words and what they mean.

Open AI has described at length how the two new models work. Instead of recognizing images, DALL·E draws images from words, captions, specifically, while CLIP (Contrastive Language-Image Pre-training) is an image recognition system that has learned to recognize images not from labelled examples in curated data sets but from images and their captions.

DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs.

What Open AI has done is to use GPT-3 and Image GPT to manipulate visual concepts through language by extending its research around Image GPT and GPT-3.

DALL·E receives both, the text and the image, as a single stream of data containing up to 1280 tokens, and is trained using maximum likelihood to generate all of the tokens, one after another, explained Open AI. (A token is any symbol from a discrete vocabulary; for humans, each English letter is a token from a 26-letter alphabet.)

DALL·E’s vocabulary has tokens for both text and image concepts. The training procedure allowed DALL·E to not only generate an image from scratch, but also to regenerate any rectangular region of an existing image that extends to the bottom-right corner, in a way that is consistent with the text prompt.

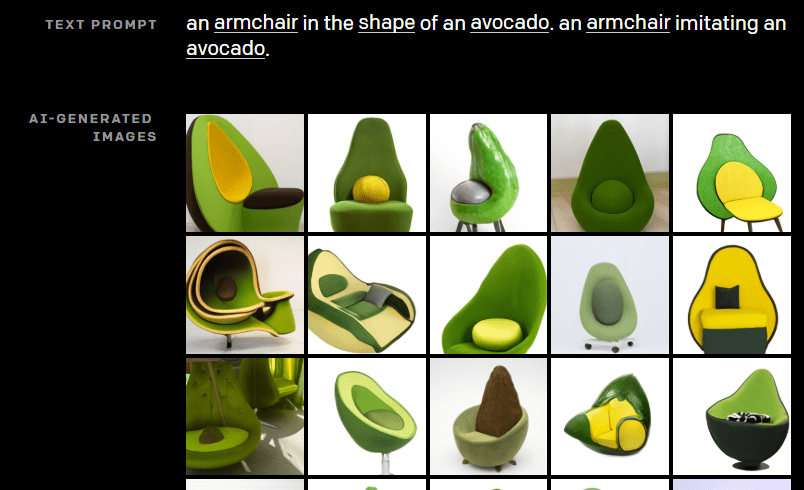

We find that DALL·E is able to create plausible images for a great variety of sentences that explore the compositional structure of language.

Open AI

Here’s a sample:

Photo caption: When generating some of these objects, such as “an armchair in the shape of an avocado”, DALL·E appears to relate the shape of a half avocado to the back of the chair, and the pit of the avocado to the cushion. We find that DALL·E is susceptible to the same kinds of mistakes mentioned in the previous visual.

About CLIP

Open AI said it was also introducing a neural network called CLIP which efficiently learned visual concepts from natural language supervision. CLIP (Contrastive Language–Image Pre-training) could be applied to any visual classification benchmark by simply providing the names of the visual categories to be recognized, similar to the “zero-shot” capabilities of GPT-2 and 3.

CLIP builds on a large body of work on zero-shot transfer, natural language supervision, and multimodal learning.

You may click here to read up on the two models.

Image credit: Open AI