MonikaP / Pixabay

Autonomous vehicles and autonomous vehicle technology are developing at a rapid pace. From Uber to Google, Waymo, Bosch, Mercedes-Benz and others, many European, Japanese and American tech and automobile companies are in the race. The global industry is estimated to reach US $560 billion by 2026, with an even broader impact on city infrastructure, policy and driving behavior.

Different levels of autonomy require a combination of hardware, software, algorithms and data processing to manage and process commands and actuate controls. Virtually every aspect of the Internet of Things (IoT), from sensing and connectivity to embedded computing and data processing, is involved in this domain. IoT experts are already delving into this field but have yet to fully understand and scope the value they can add and the role they might play in its development.

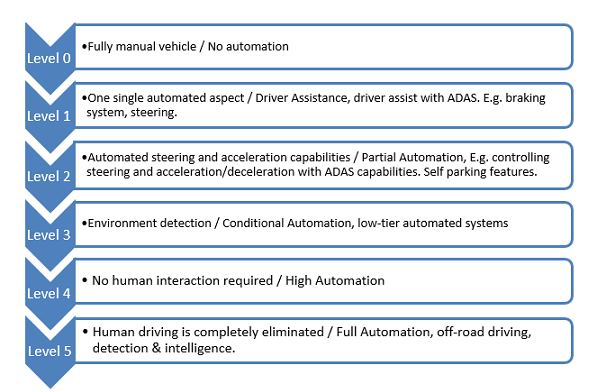

Autonomous Vehicles (AVs) are anticipated to alleviate road congestion through higher throughput, improve road safety by eliminating human error, and free drivers from the burden of driving, allowing greater productivity and/or time for rest, along with a myriad of other foreseen benefits. On the way to full autonomy, AV development is classified into levels of automation ranging from 0 (no automation) to 5 (full automation).

The table below shows the SAE levels and the autonomous features of each:

Tab 1: SAE levels of automation in the development of AVs
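The levels summarized in Tab 1 can also be expressed as a small data structure. The Python sketch below is a hypothetical encoding that follows the level names in SAE J3016; the enum member names and the helpers `human_must_supervise` and `human_is_fallback` are illustrative assumptions, not an official API. It captures the key distinction between the levels: at 0 to 2 the human drives and supervises at all times, at 3 the system drives but the human must take over when asked, and at 4 and 5 no human fallback is needed.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels (member names are illustrative)."""
    NO_DRIVING_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_DRIVING_AUTOMATION = 2
    CONDITIONAL_DRIVING_AUTOMATION = 3
    HIGH_DRIVING_AUTOMATION = 4
    FULL_DRIVING_AUTOMATION = 5


def human_must_supervise(level: SAELevel) -> bool:
    # Levels 0-2 are driver support features: the human drives and monitors constantly.
    return level <= SAELevel.PARTIAL_DRIVING_AUTOMATION


def human_is_fallback(level: SAELevel) -> bool:
    # Up to level 3 a human must be ready to drive when the system requests it.
    return level <= SAELevel.CONDITIONAL_DRIVING_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, human_must_supervise(level), human_is_fallback(level))
```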

An AV combines a given level of autonomy with the enabling technologies that work together to achieve it, up to full autonomy. The implementation layer offers a way to understand the technology components and the roles that IoT experts might play in the development and/or research of AV technology. The technology implementation layers of an AV include perception, localization, planning and control.

Perception: This layer uses short- and long-range radars and ultrasonic sensors for road surface extraction and on-road object detection.

LIDAR (light detection and ranging), a device that sends millions of light pulses per second in a well-designed pattern, is at the heart of object detection for most existing autonomous vehicles.
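LIDAR ranging rests on time of flight: a pulse travels to a surface and back, so the range is half the round-trip distance covered at the speed of light. A minimal Python sketch, assuming an idealized sensor that reports each pulse's round-trip time together with its azimuth and elevation angles, and a sensor frame with x forward, y left and z up (the function names are hypothetical):

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    # The pulse covers the distance twice (out and back), so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    # Convert one spherical return into an (x, y, z) point in the sensor frame.
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    r = range_from_time_of_flight(333.6e-9)  # a return after roughly a third of a microsecond
    print(round(r, 2), to_cartesian(r, math.radians(30), math.radians(-2)))
```

Repeating this conversion for every pulse in the scan pattern yields the point cloud that downstream perception works on.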

Environmental perception refers to developing a contextual understanding of the environment, such as where obstacles are located, detecting road signs and markings, and categorizing data by its semantic meaning. The main opportunities lie in road detection and object recognition.
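Road detection and object recognition often start by separating road-surface points from everything above them. The Python sketch below assumes flat ground at a known height in the vehicle frame (x forward, y left, z up, in metres) and a hypothetical height tolerance; real systems usually fit a ground plane or surface model instead. Points above the road are then binned into a 2-D grid, and cells with enough points are flagged as occupied, a crude form of obstacle detection. All function names and thresholds are illustrative assumptions.

```python
import math
from collections import Counter
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up) in metres


def split_ground(points: Iterable[Point], ground_z: float = 0.0,
                 tolerance: float = 0.15) -> Tuple[List[Point], List[Point]]:
    # Points within `tolerance` of the assumed flat ground are treated as road surface.
    road, above = [], []
    for p in points:
        (road if abs(p[2] - ground_z) <= tolerance else above).append(p)
    return road, above


def occupied_cells(points: Iterable[Point], cell_size: float = 0.5,
                   min_points: int = 3) -> Set[Tuple[int, int]]:
    # Count points per (x, y) grid cell; sparse cells are discarded as noise.
    counts = Counter(
        (math.floor(x / cell_size), math.floor(y / cell_size)) for x, y, _ in points
    )
    return {cell for cell, n in counts.items() if n >= min_points}


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.02), (2.0, 1.0, -0.05), (5.1, 0.1, 0.8),
             (5.2, 0.2, 1.0), (5.3, 0.1, 0.5), (10.0, 10.0, 2.0)]
    road, above = split_ground(cloud)
    print(len(road), occupied_cells(above))
```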

Experts would enter this field as developers with advanced knowledge of deep learning and machine learning, which are crucial to developing, simulating and predicting the various vehicle states. Predicting the environment, and designing and training a model to see obstacles, cars ahead and road bumps, requires sophisticated algorithms and mathematics. Knowledge of physics, mathematics and data science in general will therefore be crucial at every stage of development.

Localization: This is the problem of determining the pose of the ego vehicle and measuring its own motion, and it is one of the fundamental capabilities that enable autonomous driving. Because it is often difficult and impractical to determine the exact pose (position and orientation) of the vehicle, localization is usually formulated as a pose estimation problem. One of the most popular ways of localizing a vehicle is to fuse satellite-based navigation systems with inertial navigation systems. Satellite navigation systems, such as GPS and GLONASS, provide an absolute position but depend on receiving satellite signals, which can be blocked in tunnels or between tall buildings. Inertial navigation systems, which use accelerometers, gyroscopes and signal processing techniques to estimate the attitude of the vehicle, do not require external infrastructure but accumulate drift over time, so the two approaches complement each other. Localization requires skills in automotive engineering and embedded software development, with knowledge of satellite communication, telemetry and navigation.
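Satellite/inertial fusion of this kind is commonly done with a Kalman filter. The Python sketch below is a deliberately simplified one-dimensional version: it assumes the inertial system already supplies a velocity estimate along the lane, treats position as the only state, and uses hypothetical noise parameters `q` (drift per second) and `r` (GNSS variance). Dead reckoning grows the uncertainty, and each satellite fix pulls the estimate back in proportion to the Kalman gain. The class name `LaneLocalizer` is illustrative.

```python
from dataclasses import dataclass


@dataclass
class LaneLocalizer:
    """1-D position estimate fusing inertial dead reckoning with GNSS fixes."""
    x: float  # estimated position along the lane (m)
    p: float  # variance of that estimate (m^2)
    q: float  # hypothetical drift variance added per second of dead reckoning (m^2/s)
    r: float  # hypothetical GNSS measurement variance (m^2)

    def predict(self, ins_velocity: float, dt: float) -> None:
        # Propagate with the inertially derived velocity; uncertainty grows with time.
        self.x += ins_velocity * dt
        self.p += self.q * dt

    def update(self, gnss_position: float) -> None:
        # Kalman gain: how much to trust the fix relative to the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (gnss_position - self.x)
        self.p *= 1.0 - k


if __name__ == "__main__":
    f = LaneLocalizer(x=0.0, p=1.0, q=0.5, r=4.0)
    f.predict(ins_velocity=10.0, dt=1.0)
    f.update(gnss_position=12.0)
    print(round(f.x, 3), round(f.p, 3))
```

With these values, one second of dead reckoning at 10 m/s gives x = 10 m with variance 1.5; a GNSS fix at 12 m then yields a gain of 3/11, moving the estimate to about 10.55 m and shrinking the variance. A production system would typically track full 3-D pose and velocity with an extended or error-state Kalman filter.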

Planning: Early-stage self-driving vehicles (SDVs) were generally only semi-autonomous, since their designed functionality was typically limited to lane following, adaptive cruise control and other basic functions. Full autonomy requires several forms of planning. Mission planning is performed through graph search over a directed graph that reflects the connectivity of the road/path network. Motion planning is the process of deciding on a sequence of actions that reaches a specified goal while avoiding collisions with obstacles. Sampling-based planning methods rely on random sampling of continuous spaces and the generation of a graph of feasible trajectories. Planning in dynamic environments must cope with uncertainties that arise from perception sensor accuracy, localization accuracy, environment changes and control policy execution. Behavioral planning and combinatorial planning also require advanced engineering and mathematics. This layer suits AI experts, data science analysts, developers with good knowledge of Python, R, Java, Lisp or Prolog, and embedded engineers with expertise in robotics and mechatronics.
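Mission planning as graph search can be illustrated with Dijkstra's algorithm over a directed road graph. This is a minimal Python sketch: the graph format (a dict mapping each intersection to `(neighbor, cost)` pairs) and the function name `mission_plan` are assumptions for illustration, and edge costs could stand for distance or expected travel time.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, edge cost), ...]


def mission_plan(graph: Graph, start: str, goal: str) -> Tuple[Optional[List[str]], float]:
    """Return (route, cost) of the cheapest route, or (None, inf) if unreachable."""
    dist = {start: 0.0}          # best known cost to each node
    prev: Dict[str, str] = {}    # predecessor on the best known route
    frontier = [(0.0, start)]    # min-heap ordered by cost so far
    settled = set()
    while frontier:
        cost, node = heapq.heappop(frontier)
        if node in settled:
            continue             # stale heap entry
        settled.add(node)
        if node == goal:
            break                # cheapest route to the goal is now fixed
        for neighbor, edge_cost in graph.get(node, []):
            new_cost = cost + edge_cost
            if new_cost < dist.get(neighbor, math.inf):
                dist[neighbor] = new_cost
                prev[neighbor] = node
                heapq.heappush(frontier, (new_cost, neighbor))
    if goal not in dist:
        return None, math.inf
    route = [goal]
    while route[-1] != start:    # walk predecessors back to the start
        route.append(prev[route[-1]])
    route.reverse()
    return route, dist[goal]


if __name__ == "__main__":
    roads = {"A": [("B", 2.0), ("C", 5.0)], "B": [("C", 1.0), ("D", 4.0)],
             "C": [("D", 1.0)], "D": []}
    print(mission_plan(roads, "A", "D"))
```

Because edges are directed, one-way streets are modeled simply by omitting the reverse edge. Motion planning would then refine each leg of this route into a collision-free trajectory.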

Control: Motion control is the process of converting intentions into actions; its main purpose is to execute the planned intentions by providing the necessary inputs to the hardware level that will generate the desired motions. Common approaches include classical control and model predictive control. Autonomous systems also need motion models for planning and prediction purposes. Trajectory generation and tracking can be handled together (combined trajectory generation and tracking) or as separate trajectory generation and trajectory tracking stages. One family of tracking methods is geometric path tracking: these algorithms use simple geometric relations to derive steering control laws, using a look-ahead distance to measure the error ahead of the vehicle. This layer suits experts in power and analog ICs, SoC experts, embedded engineers, machine vision specialists and system designers with an interest in robotics and actuation.
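Pure pursuit is a classic geometric path tracking algorithm of the kind described above: it picks a point on the path roughly one look-ahead distance ahead and steers along the circular arc that reaches it, using the bicycle-model law δ = atan(2L·sin α / l_d), where L is the wheelbase, α the angle between the vehicle heading and the look-ahead point, and l_d the distance to that point. A Python sketch, assuming a path given as ordered (x, y) waypoints that starts near the vehicle; the function names are hypothetical.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float]


def find_lookahead_point(path: List[Waypoint], x: float, y: float,
                         lookahead: float) -> Waypoint:
    # Naive search: first waypoint at least `lookahead` metres from the vehicle.
    # Assumes the path is ordered and begins near the vehicle's current position.
    for px, py in path:
        if math.hypot(px - x, py - y) >= lookahead:
            return px, py
    return path[-1]  # near the end of the path, aim at the final waypoint


def pure_pursuit_steering(x: float, y: float, yaw: float,
                          target: Waypoint, wheelbase: float) -> float:
    # Returns the front-wheel steering angle in radians (positive = turn left).
    dx = target[0] - x
    dy = target[1] - y
    ld = math.hypot(dx, dy)              # distance to the look-ahead point
    alpha = math.atan2(dy, dx) - yaw     # bearing of the target relative to heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


if __name__ == "__main__":
    path = [(float(i), 0.1 * i * i) for i in range(20)]
    target = find_lookahead_point(path, 0.0, 0.0, lookahead=5.0)
    print(target, pure_pursuit_steering(0.0, 0.0, 0.0, target, wheelbase=2.7))
```

A longer look-ahead distance gives smoother but less precise tracking, while a shorter one tracks tightly but can oscillate; many implementations scale it with vehicle speed.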

Perception refers to the ability of an autonomous system to collect information and extract relevant knowledge from the environment. Localization is the ability of the robot to determine its position with respect to the environment. Planning is the process of making purposeful decisions in order to achieve the robot's higher-order goals, typically to bring the vehicle from a start location to a goal location while avoiding obstacles and optimizing over designed heuristics. Finally, the control competency refers to the robot's ability to execute the planned actions that have been generated by the higher-level processes.
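These four competencies form a pipeline that runs on every control cycle. The toy Python sketch below wires them together for a single-lane scenario; every function, threshold and gain is a hypothetical placeholder, with perception reduced to picking the nearest valid range return, localization to odometry, planning to a stop-or-cruise rule, and control to a proportional speed controller.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorldModel:
    obstacle_ahead_m: Optional[float]  # distance to the nearest obstacle, if any


def perceive(range_readings_m: List[float], max_range_m: float = 100.0) -> WorldModel:
    # Perception: keep returns inside the sensor's valid range, report the nearest one.
    valid = [r for r in range_readings_m if 0.0 < r < max_range_m]
    return WorldModel(min(valid) if valid else None)


def localize(prev_position_m: float, wheel_speed_mps: float, dt: float) -> float:
    # Localization: simple odometry dead reckoning along the lane.
    return prev_position_m + wheel_speed_mps * dt


def plan(world: WorldModel, cruise_speed_mps: float, safe_gap_m: float) -> float:
    # Planning: cruise unless an obstacle is inside the safety gap, then stop.
    if world.obstacle_ahead_m is not None and world.obstacle_ahead_m < safe_gap_m:
        return 0.0
    return cruise_speed_mps


def control(target_speed_mps: float, current_speed_mps: float, kp: float = 0.5) -> float:
    # Control: proportional controller turning the speed error into an acceleration command.
    return kp * (target_speed_mps - current_speed_mps)


if __name__ == "__main__":
    world = perceive([42.0, 8.0, 150.0])
    position = localize(100.0, 12.0, 0.1)
    target = plan(world, cruise_speed_mps=15.0, safe_gap_m=10.0)
    print(position, target, control(target, current_speed_mps=12.0))
```

In this tick the obstacle at 8 m falls inside the 10 m gap, so the planner requests a stop and the controller commands a deceleration of 6 m/s².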

In the future, we see the autonomous world as an ecosystem offering various mobility applications as a service, from wayfinding and parking solutions to VR entertainment, on-road car charging and connected environments. All these technology stacks present a new opportunity for IoT and embedded engineers and developers to make a dent and contribute to the development and deployment of robust applications and over-the-top (OTT) services.

If you are an IoT expert, a developer or entrepreneur looking to learn more about autonomous vehicle technology, check out some introductory and advanced courses on Udemy.

All information/views/opinions expressed in this article are those of the author. This website may or may not agree with them.

Charles Ikem is a service designer with expertise in UI/UX, interaction design, data science and digital transformation. Charles has worked for CELT, UK, Centre for Technology Entrepreneurship in Italy as well as in South Africa and Nigeria. He holds a Master’s Degree in Design Management from Birmingham City University, UK and a PhD from University of Padova, Italy where he worked on service design applications to Internet of Things. Charles is currently working as an independent consultant for M4ID based in Helsinki.
